We are the Department of Computer Science & Engineering

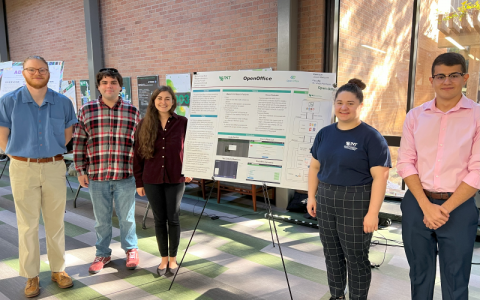

Our department is committed to providing high-quality educational programs that balance the theoretical and experimental aspects of computer science, as well as software and hardware issues, through curricula that serve our communities locally and globally.

UNT CSE offers several undergraduate and graduate degree programs, including a Bachelor of Arts degree in Information Technology; Bachelor of Science and Master of Science degrees in Computer Engineering, Computer Science, and Cybersecurity; and a doctoral degree in Computer Science and Engineering. A Professional Master of Science degree in Computer Science is also offered.

The Computer Science program is accredited by the Computing Accreditation Commission of ABET, http://www.abet.org.

The Information Technology program is accredited by the Computing Accreditation Commission of ABET, http://www.abet.org.

The Computer Engineering program is accredited by the Engineering Accreditation Commission of ABET, http://www.abet.org.

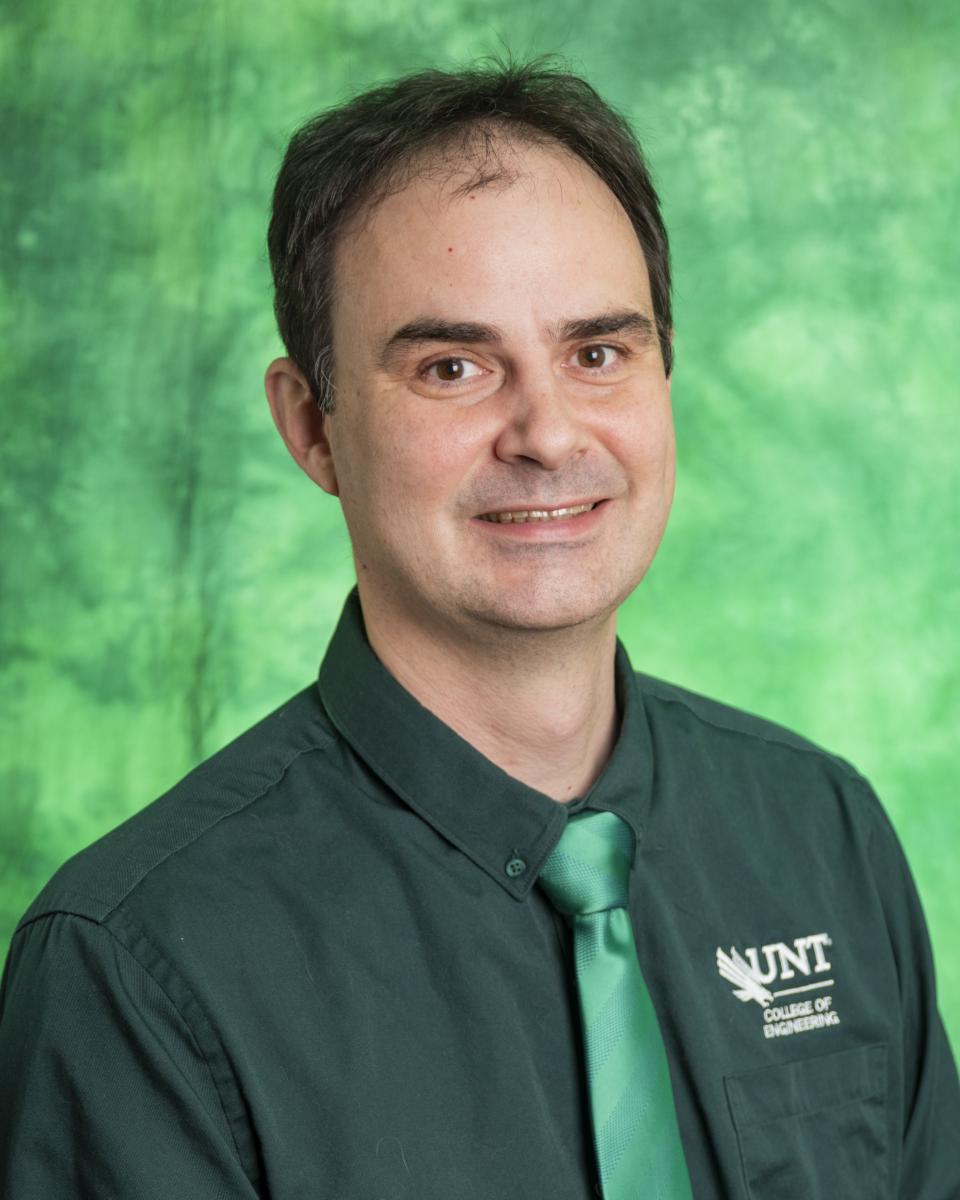

A message from our Department Chair

I would like to extend my warmest welcome to you as Chair of the Department of Computer Science and Engineering at the University of North Texas. Over the past two decades, computing has revolutionized the world as we know it, and it will continue to serve as the core of all technologies in the 21st century.

- Dr. Gergely Záruba